The Applied Study of Over-parameterized Neural Networks

Check out my paper!

Background and abstract

My paper builds on "On the Power of Over-parametrization in Neural Networks with Quadratic Activation" by Du and Lee. Their paper proves theoretically that over-parameterized one-layer networks with quadratic activation have a benign loss landscape: every local minimum is a global minimum. My paper tests their result empirically and extends the analysis to ReLU activation. My results suggest that the paper's definition of "over-parameterized" is conservative, since many networks that do not meet it still show the same global convergence. I also explore where this property stops holding for one-layer networks with quadratic and ReLU activations.
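To make the setting concrete, here is a minimal pure-Python sketch of this kind of experiment. It is not the code used in the paper. It assumes a one-hidden-layer network f(x) = Σ_j σ(w_j · x) with output weights fixed to 1, a squared loss, full-batch gradient descent, and synthetic labels produced by a small planted "teacher" network. The names `ACTIVATIONS`, `forward`, `make_data` and `train` are hypothetical. The width k = 5 with n = 10 samples satisfies k ≥ √(2n), the width threshold as I read Du and Lee's result.

```python
import random

# Activation functions paired with their derivatives (hypothetical helper).
# For ReLU, 0 is used as the (sub)gradient at z == 0, a common convention.
ACTIVATIONS = {
    "quadratic": (lambda z: z * z, lambda z: 2.0 * z),
    "relu": (lambda z: max(z, 0.0), lambda z: 1.0 if z > 0 else 0.0),
}


def forward(W, x, act):
    """f(x) = sum_j act(w_j . x); output weights fixed to 1 (an assumption)."""
    zs = [sum(wi * xi for wi, xi in zip(w, x)) for w in W]
    return zs, sum(act(z) for z in zs)


def random_weights(k, d, rng):
    """k hidden units, each a d-dimensional weight vector drawn from N(0, 1/d)."""
    return [[rng.gauss(0.0, d ** -0.5) for _ in range(d)] for _ in range(k)]


def make_data(n, d, k_teacher, activation, seed=0):
    """Hypothetical synthetic task: labels come from a planted teacher network."""
    rng = random.Random(seed)
    act, _ = ACTIVATIONS[activation]
    W_true = random_weights(k_teacher, d, rng)
    X = [[rng.gauss(0.0, 1.0) for _ in range(d)] for _ in range(n)]
    y = [forward(W_true, x, act)[1] for x in X]
    return X, y


def train(X, y, k, activation="quadratic", lr=0.005, steps=2000, seed=1):
    """Full-batch gradient descent on L(W) = 1/(2n) * sum_i (f(x_i) - y_i)^2."""
    act, dact = ACTIVATIONS[activation]
    rng = random.Random(seed)
    n, d = len(X), len(X[0])
    W = random_weights(k, d, rng)
    losses = []
    for _ in range(steps):
        grad = [[0.0] * d for _ in range(k)]
        loss = 0.0
        for x, t in zip(X, y):
            zs, out = forward(W, x, act)
            r = out - t  # residual on this sample
            loss += 0.5 * r * r / n
            # dL/dw_j = (1/n) * sum_i r_i * act'(w_j . x_i) * x_i
            for j in range(k):
                g = r * dact(zs[j]) / n
                for i in range(d):
                    grad[j][i] += g * x[i]
        losses.append(loss)  # loss before this step's update
        for j in range(k):
            for i in range(d):
                W[j][i] -= lr * grad[j][i]
    return W, losses


if __name__ == "__main__":
    n, d = 10, 3
    k = 5  # k >= sqrt(2n) ~ 4.47
    for name in ("quadratic", "relu"):
        X, y = make_data(n, d, k_teacher=2, activation=name)
        _, losses = train(X, y, k, activation=name)
        print(f"{name}: initial loss {losses[0]:.4f}, final loss {losses[-1]:.4f}")
```

Shrinking `k` below the threshold and rerunning over several seeds is one way to probe the boundary discussed above. A loss that plateaus well above zero would hint at a spurious local minimum, although a single run cannot prove one exists.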

Sample images

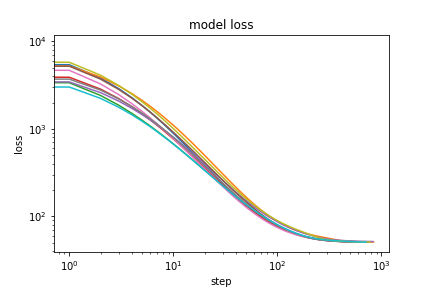

Loss of the NN with quadratic activation (log scale)

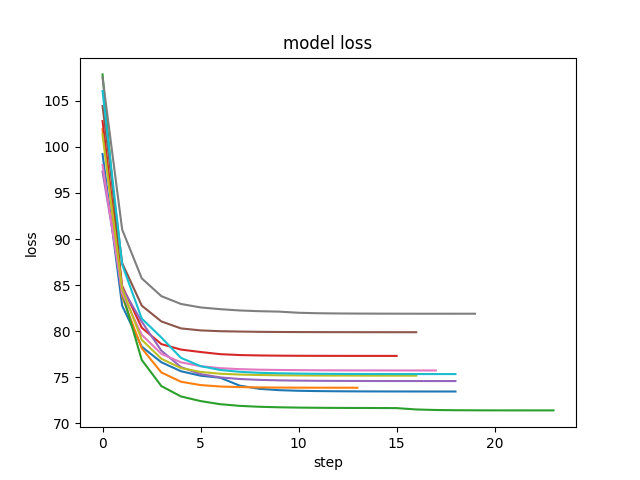

Loss of the NN with ReLU activation

Evaluation by my professor

Check out the evaluation!